Hi everyone,

Hope you’re doing good!

A few days ago, a client complained about a slowness in their SAP DB environment running on Oracle. DB version is 19.24.

I asked them to run eDB360.

With the eDB360 output in hand, I did notice something wrong:

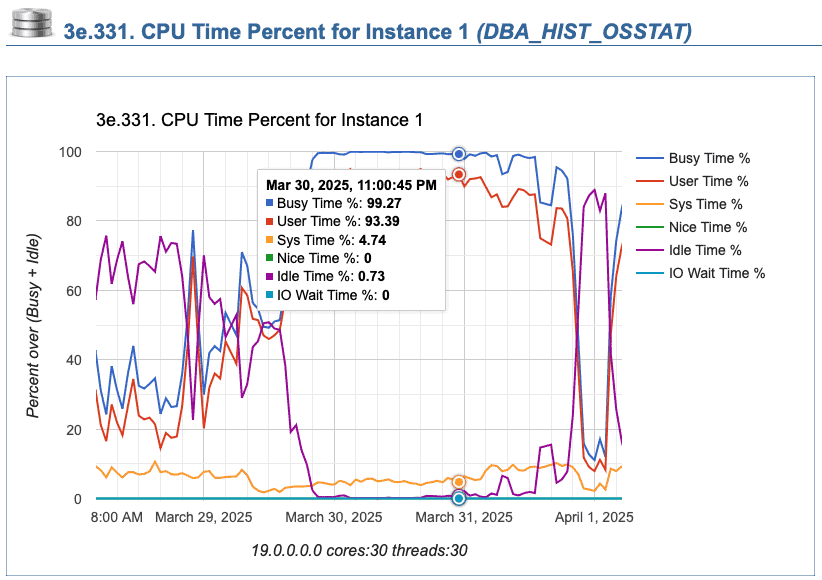

We can see that the Busy Time of CPU was 99%. So, all the CPU was in use.

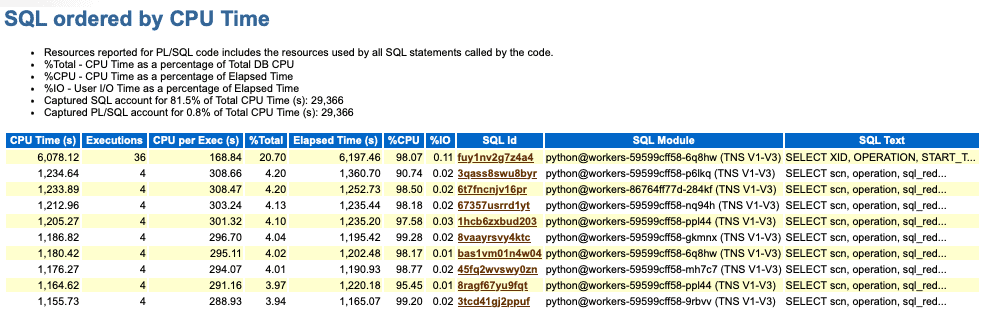

I also asked for an AWR report and noticed that:

We can clearly see that the SQL’s ordered by CPU came from the same module.

This client is performing CDC for some huge tables from their on-premises database to Google Cloud through DataStreams, to be consumed later for some BigQuery reports.

When the client configured their DataStreams streams, back 18 months ago, they used the only option available in the product at that moment to capture the changes on Oracle DB: LogMiner.

The client started replicating some set of tables, each set on its own stream. The main problem is, each stream is running one specific session for LogMiner, and LogMiner is a single-threaded process.

As reference, two of the SQLs listed above are listed below.

On the first one, we can see the query on V$LOGMNR_CONTENTS checking for the SQLs on two objects (two tables on the stream):

SELECT scn, operation, sql_redo, table_name, data_obj#, row_id, SYS_EXTRACT_UTC(FROM_TZ(CAST(timestamp AS TIMESTAMP), DBTIMEZONE)) AS timestamp, xid, csf, info, rollback, thread#, rs_id, ssn FROM V$LOGMNR_CONTENTS WHERE (DATA_OBJ# IN (492839, 491467)) AND OPERATION IN ('INSERT', 'DIRECT INSERT', 'UPDATE', 'DELETE', 'UNSUPPORTED', 'INTERNAL') AND (SEG_OWNER IS NULL OR SEG_OWNER NOT IN ('SYS', 'WKSYS', 'OWBSYS', 'CSMIG', 'SPATIAL_CSW_ADMIN_USR', 'ORDSYS', 'DEMO', 'OLAPSYS', 'APEX_040000', 'APEX_PUBLIC_USER', 'DSSYS', 'MDDATA', 'SI_INFORMTN_SCHEMA', 'DIP', 'AURORA$ORB$UNAUTHENTICATED', 'MGMT_VIEW', 'AWR_STAGE', 'OUTLN', 'XDB', 'DMSYS', 'ORACLE_OCM', 'DBSNMP', 'XS$NULL', 'TRACESVR', 'FLOWS_30000', 'CTXSYS', 'LBACSYS', 'SYSMAN', 'ORDPLUGINS', 'EXFSYS', 'WMSYS', 'WKPROXY', 'FLOWS_FILES', 'WK_TEST', 'MDSYS', 'TSMSYS', 'ANONYMOUS', 'PERFSTAT', 'SPATIAL_WFS_ADMIN_USR', 'AUDSYS', 'RDSADMIN', 'SYSTEM', 'GSMADMIN_INTERNAL', 'DBSFWUSER', 'APPQOSSYS'))On the second one, we can see the query on V$LOGMNR_CONTENTS checking for the SQLs on three objects (three tables on the stream):

SELECT scn, operation, sql_redo, table_name, data_obj#, row_id, timestamp + (current_date - sysdate) AS timestamp, xid, csf, info, rollback, thread#, rs_id, ssn FROM V$LOGMNR_CONTENTS WHERE (DATA_OBJ# IN (788735, 389117, 389127)) AND OPERATION IN ('INSERT', 'DIRECT INSERT', 'UPDATE', 'DELETE', 'UNSUPPORTED', 'INTERNAL') AND (SEG_OWNER IS NULL OR SEG_OWNER NOT IN ('SYS', 'WKSYS', 'OWBSYS', 'CSMIG', 'SPATIAL_CSW_ADMIN_USR', 'ORDSYS', 'DEMO', 'OLAPSYS', 'APEX_040000', 'APEX_PUBLIC_USER', 'DSSYS', 'MDDATA', 'SI_INFORMTN_SCHEMA', 'DIP', 'AURORA$ORB$UNAUTHENTICATED', 'MGMT_VIEW', 'AWR_STAGE', 'OUTLN', 'XDB', 'DMSYS', 'ORACLE_OCM', 'DBSNMP', 'XS$NULL', 'TRACESVR', 'FLOWS_30000', 'CTXSYS', 'LBACSYS', 'SYSMAN', 'ORDPLUGINS', 'EXFSYS', 'WMSYS', 'WKPROXY', 'FLOWS_FILES', 'WK_TEST', 'MDSYS', 'TSMSYS', 'ANONYMOUS', 'PERFSTAT', 'SPATIAL_WFS_ADMIN_USR', 'AUDSYS', 'RDSADMIN', 'SYSTEM', 'GSMADMIN_INTERNAL', 'DBSFWUSER', 'APPQOSSYS'))The client has 18 streams, and as I said, on each stream, they are replicating some set of tables. So, basically, the client has 18 sessions on DB performing actions using LogMiner, the content on V$LOGMNR_CONTENTS is exhibited at session level.

The below picture illustrates how this is working:

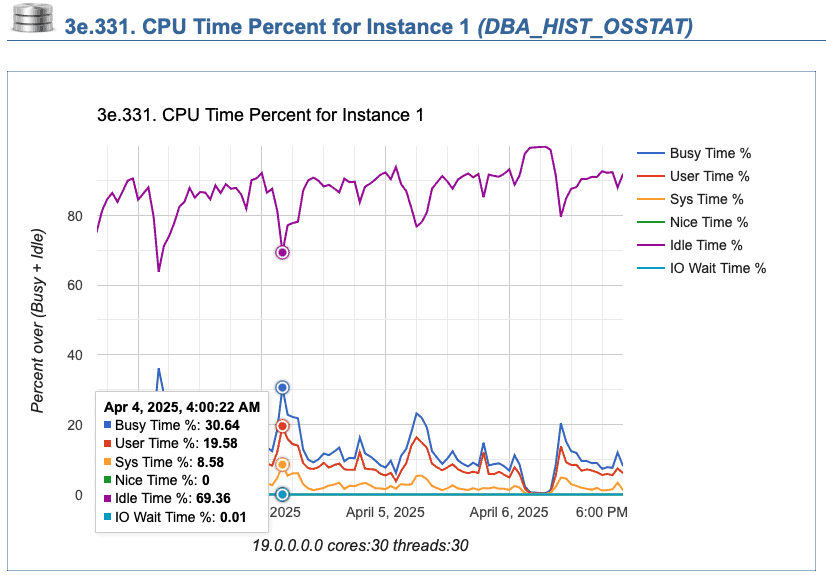

We asked client to stop the DataStream processes. After this, the percentage for the Busy Time came down abruptly: ~30%. Idle Time went to 69%, see the chart below:

We also can see the Average of Active Sessions:

Since December/2024, Google is now supporting Binary Log Reader for DataStreams. It’s still on Preview state, but will help to reduce the overhead in case clients have several streams running on their databases.

This blog post is talking about the issue client got using DataStreams, but please, note, that this was caused by several sessions of LogMiner. So, it does it not matter which software you are using for CDC, you need to be cautious about the scalability on your environment.

In our case, client agreed to:

- Move the Streams to consume data from DR;

- Create new Streams using the Binary Log Reader as long it’s fully available and supported (not on Preview).

Hope it helps!

Peace.